New brain-computer interface 'reads' your innermost thoughts and translates them into text

You read words with your eyes, but you also hear them with a voice in your head. That inner speech isn’t just a feeling. It leaves a clear footprint in the brain that can be mapped to text on a screen.

For people who can’t speak because of paralysis or other conditions, decoding that inner voice could open the door to faster, more natural conversation.

Scientists have now identified brain activity patterns linked to inner speech and decoded them into text with a 74% accuracy rate.

Published in the journal Cell, the work could lead to brain-computer interface (BCI) systems that let people who cannot speak aloud communicate through inner speech, with decoding switched on only when they silently think a password.

“This is the first time we’ve managed to understand what brain activity looks like when you just think about speaking,” explains lead author Erin Kunz of Stanford University.

“For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally.”

From movement decoding to inner speech

BCIs already help people with disabilities by interpreting brain signals that control movement.

Some of these systems operate prosthetic devices. Speech BCIs produce text by decoding attempted speech, in which users engage their speech muscles but produce only unintelligible sounds.

This approach is faster than eye-tracking, but it still demands physical effort, which can be tiring.

The Stanford team explored decoding purely imagined speech. “If you just have to think about speech instead of actually trying to speak, it’s potentially easier and faster for people,” says co-first author Benyamin Meschede-Krasa.

How the study worked

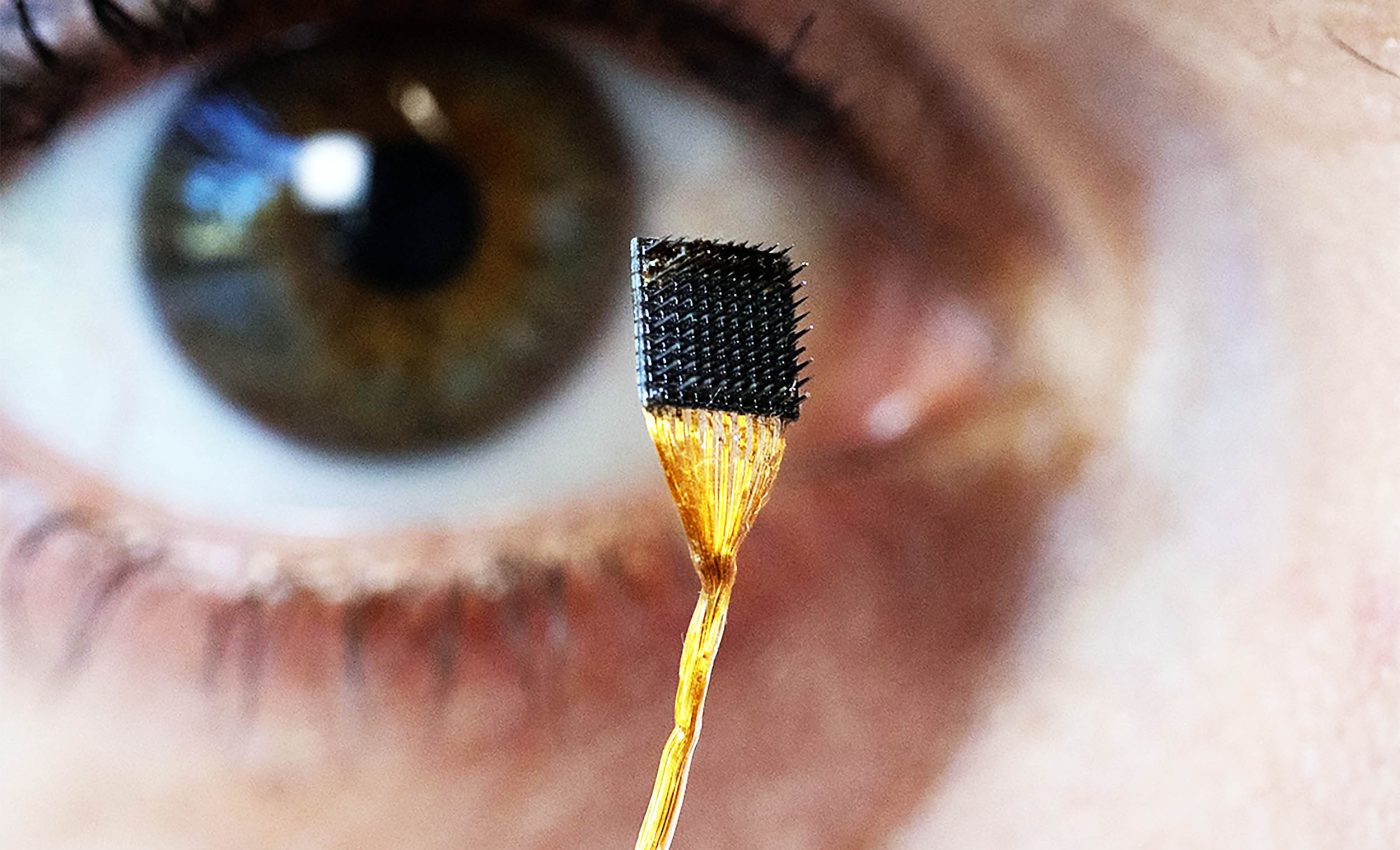

Four participants with severe paralysis caused by ALS or brainstem stroke took part. Researchers implanted microelectrodes into the motor cortex, including areas involved in producing speech.

Participants either attempted speaking or imagined saying words. The two tasks activated overlapping regions, though inner speech produced weaker signals.

The researchers also observed that imagined speech involved reduced activity in non-primary motor areas compared to attempted speech, suggesting more streamlined neural pathways.

Their models could reliably distinguish between the two types of speech despite these similarities.

Decoding and unexpected findings

Artificial intelligence models trained specifically on participants’ imagined speech decoded complete sentences drawn from a vocabulary of up to 125,000 words, with an accuracy rate of 74%.
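The article gives accuracy as a single percentage without saying how it was calculated. Speech-BCI results are often reported as a word error rate (WER): the number of word substitutions, insertions and deletions needed to turn the decoded sentence into the intended one, divided by the number of words in the intended sentence. The sketch below only illustrates that general metric. It is an assumption, not a reconstruction of how the 74% figure was computed, and the function name is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Illustrative WER: word-level edit distance / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    # dp[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words (Levenshtein distance over words).
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution or match
            )
    # Guard against division by zero for an empty reference.
    return dp[len(ref)][len(hyp)] / max(len(ref), 1)


# One wrong word out of five gives a WER of 0.2, i.e. 80% of words correct.
wer = word_error_rate("i would like some water", "i would like sum water")
print(wer, 1 - wer)
```

Under this convention, a "word accuracy" figure is simply one minus the WER, averaged over many test sentences.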

In addition to decoding prompted sentences, the system was sensitive enough to pick up on unplanned, spontaneous mental activity.

For example, when participants were asked to count the pink circles on a screen, the BCI detected the numbers they silently tallied, even though they had never been instructed to say those numbers in their heads.

This finding indicates that imagined speech generates distinct and identifiable neural patterns, even without direct prompting or deliberate effort from the user.

Furthermore, the research revealed that inner speech signals tend to follow a consistent temporal pattern across multiple trials.

In other words, the timing of brain activity during silent speech remained stable each time the participants imagined speaking.

This reliable internal rhythm could be an important factor in refining future decoding algorithms, as it provides a predictable framework for translating thought into text with greater accuracy and speed.

Distinguishing speech types

While the brain activity patterns for attempted speech and inner speech showed many similarities, the researchers discovered that there were still consistent and measurable differences between them.

These differences were significant enough for the system to accurately tell the two apart, even when the signals came from overlapping brain regions.

According to study co-author Frank Willett of Stanford University, this distinction opens the door to BCIs that respond selectively to one type of speech and ignore the other.

In practical terms, this means a device could be programmed to completely ignore inner speech unless the user chooses to activate it.

To give users full control, the team designed a password-based activation method. In this setup, decoding of inner speech would remain locked until the person silently thought of a specific phrase – in this case, “chitty chitty bang bang.”

When tested, the system recognized the password with more than 98% accuracy, helping ensure that inner speech is decoded only when the user intends it.
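The password gate described above works like a simple lock. The minimal sketch below assumes an upstream decoder has already turned neural activity into text phrases and treats that decoder as a black box. The class name, its methods and the exact-match rule are hypothetical illustrations, not the study's implementation, which may detect the keyword directly from neural signals rather than from decoded text.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GatedInnerSpeechDecoder:
    """Hypothetical gate: decoded inner speech is withheld until the
    silently thought password phrase has been detected."""
    password: str = "chitty chitty bang bang"
    unlocked: bool = False
    transcript: List[str] = field(default_factory=list)

    @staticmethod
    def _normalize(text: str) -> str:
        # Lowercase and collapse whitespace so that formatting differences
        # in the (assumed) decoder output do not block a match.
        return " ".join(text.lower().split())

    def process(self, decoded_phrase: str) -> Optional[str]:
        """Return the phrase if the gate is open; otherwise return None."""
        phrase = self._normalize(decoded_phrase)
        if not self.unlocked:
            # While locked, nothing is emitted, not even the password itself.
            if phrase == self._normalize(self.password):
                self.unlocked = True
            return None
        self.transcript.append(phrase)
        return phrase

    def lock(self) -> None:
        """Close the gate again, e.g. when the user wants privacy."""
        self.unlocked = False


gate = GatedInnerSpeechDecoder()
for thought in ["what is for lunch", "Chitty Chitty Bang Bang", "I would like some water"]:
    print(gate.process(thought))
```

Here the first thought is ignored because the gate is locked, the password unlocks it without being transcribed, and only the third phrase is emitted. A real system would also have to cope with the password being misrecognized, which is why the reported recognition rate matters.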

Future of inner speech decoding

In short, your inner voice leaves a measurable trace in the speech areas of the motor cortex. With implanted microelectrodes and well-trained models, that trace can be turned into text in real time, without any physical movement.

Free-form inner speech decoding remains a challenge due to variability in brain activity and limited vocabulary coverage.

However, the authors highlight that higher-density electrode arrays, advanced machine learning models, and integration of semantic context could improve accuracy.

“The future of BCIs is bright,” Willett says. “This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech.”

It’s not telepathy, and it’s not flawless, but it points toward a faster, more humane way for people who can’t speak to be heard – using the voice they already have, the one inside.

The study is published in the journal Cell.
