Smart skin sticker can detect emotions with 96% accuracy, similar to a lie detector

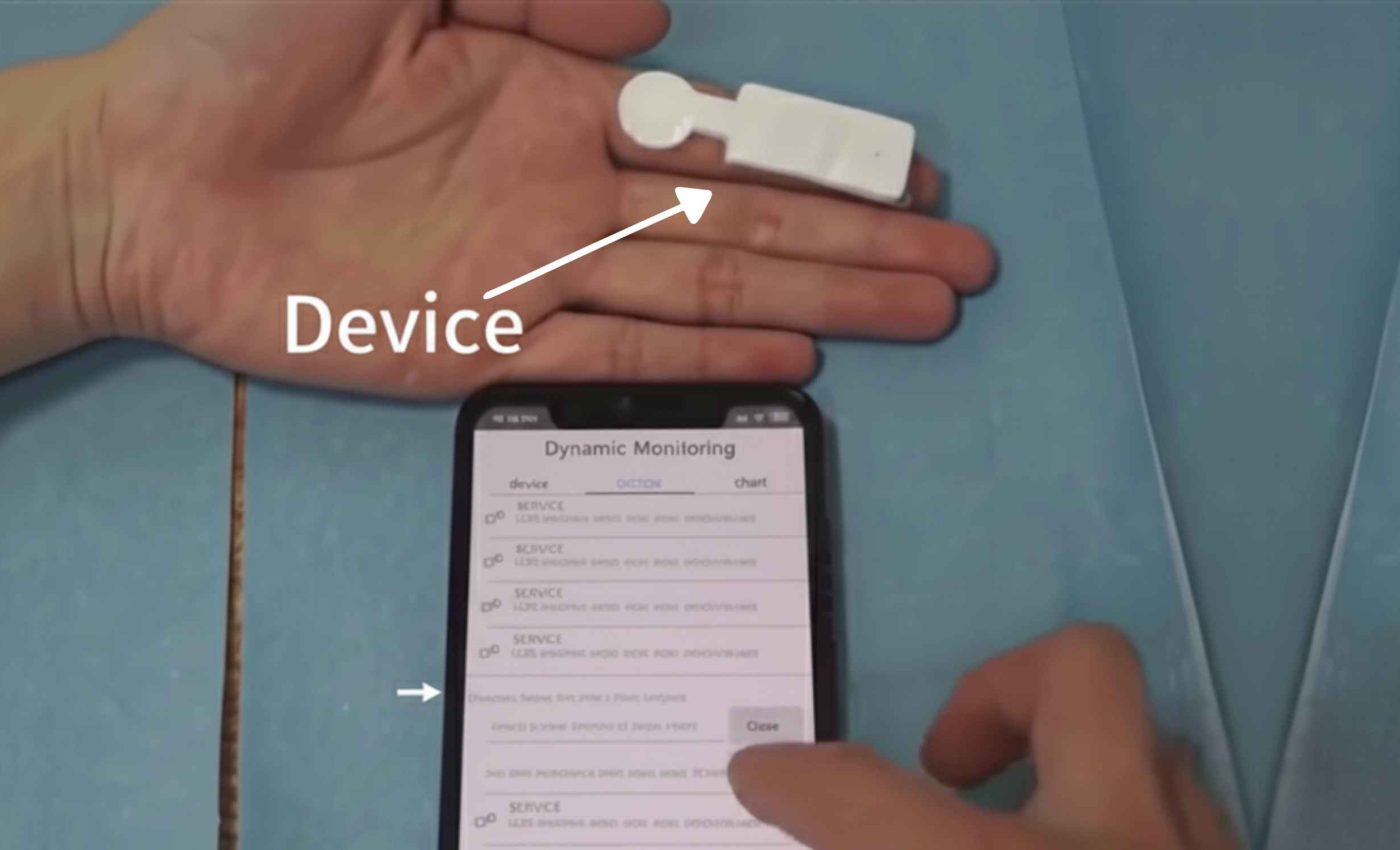

A small, stretchy sticker that sits on the skin can read the body’s signals and sort out real emotions from acted ones. It tracks temperature, humidity, heart rate, and blood oxygen, then uses machine learning to make a call.

Many people keep a straight face while feeling something very different inside. A sensor that listens to the body rather than the face could help clinicians learn what is actually going on.

Sticker has sensor that reads emotion

The project comes from Huanyu Cheng, an associate professor of engineering science and mechanics at Pennsylvania State University. His team built a soft device that bends and stretches with the skin while staying reliable.

The patch is a biosensor, a wearable tool that turns physical changes into electrical signals the system can analyze.

It also checks blood-oxygen saturation, known as SpO2, which is the percentage of hemoglobin in the blood that is carrying oxygen. Oxygen levels shift with arousal and stress.

Layers of platinum and gold are cut into wavy shapes so the circuits keep working when bent, while carbon nanotube channels sense humidity with high sensitivity.

Rigid, waterproof barrier layers protect the temperature and humidity elements so that strain from facial motion and stray moisture do not contaminate the readings. These details are laid out in the technical paper.

A tiny wireless module powers the system and streams data to a phone or the cloud in real time. Only signals are recorded, not personal identifiers, which reduces the risk of exposing personal information.

How the AI was trained

In tests with volunteers, the model classified acted expressions with 96.28 percent accuracy and detected emotions sparked by video clips with 88.83 percent accuracy.

The training set included eight people imitating six basic expressions one hundred times each, and a separate validation round included three different people.
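For a sense of scale, the figures above can be turned into a quick calculation. The sketch below assumes one reading of the description, namely that each of the eight people performed each of the six expressions one hundred times. It also shows how an accuracy percentage is generally computed. The labels in the example are made up for illustration and are not data from the study.

```python
def accuracy(true_labels, predicted_labels):
    """Return the fraction of predictions that match the true labels."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("label lists must not be empty")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Assumed reading: every person repeats every expression 100 times.
people, expressions, repetitions = 8, 6, 100
training_samples = people * expressions * repetitions

# Made-up labels, only to show how the accuracy figure is formed.
true_labels = ["happy", "sad", "angry", "happy"]
predicted = ["happy", "sad", "happy", "happy"]

print(training_samples)                  # 4800 under the assumed reading
print(accuracy(true_labels, predicted))  # 0.75, three of four correct
```

Under that reading the training set holds 4,800 recordings. An accuracy of 96.28 percent means roughly 96 of every 100 test samples received the correct label.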

“Relying only on facial expressions to understand emotions can be misleading,” said Cheng. The approach pairs face movement with internal signals that are less open to conscious control.

The software uses a neural network, a set of connected math functions that learn patterns from data rather than following rule-based instructions.

The sensor’s software learns to associate signal patterns with specific emotions, then outputs a label for each short time window.
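The idea of one label per short time window can be sketched in a few lines. This is a minimal illustration, not the team's method. The window length, the step size, the heart-rate numbers, and the `label_window` threshold rule are all hypothetical, and the simple rule stands in for the trained neural network.

```python
from statistics import mean


def sliding_windows(samples, window_size, step):
    """Yield consecutive windows of `window_size` samples, advancing by `step`."""
    for start in range(0, len(samples) - window_size + 1, step):
        yield samples[start:start + window_size]


def label_window(window, threshold=80.0):
    """Hypothetical stand-in for the trained model: compare the window mean to a threshold."""
    return "aroused" if mean(window) > threshold else "calm"


# Made-up heart-rate readings in beats per minute.
heart_rate = [72, 74, 73, 75, 90, 95, 97, 99]

labels = [label_window(w) for w in sliding_windows(heart_rate, window_size=4, step=2)]
print(labels)  # ['calm', 'aroused', 'aroused']
```

Overlapping windows, as used here, let the label update more often than the window length alone would allow.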

The patch itself records strain from facial muscles along with skin temperature, humidity, and heart-related signals.

That blend helps the model avoid false calls when a person tries to fake a smile or suppress a frown.

Multimodal approach matters

A growing body of work shows that combining peripheral signals improves recognition compared with any single channel alone.

One academic review reports higher accuracy when features from photoplethysmography, skin temperature, and skin conductance are fused.

This sticker follows that logic by separating, then fusing, signals that map to emotional arousal. Decoupling reduces crosstalk, so a humid cheek does not distort temperature or strain readouts.
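The separate-then-fuse idea can be illustrated in software, although the patch achieves its decoupling in hardware. The sketch below assumes a simple feature-level fusion: each channel is normalized on its own scale, then the channels are stacked into one vector per time step. The function names, channel names, and readings are hypothetical and are not taken from the paper.

```python
from statistics import mean, pstdev


def zscore(values):
    """Rescale one channel to zero mean and unit spread, independent of other channels."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def fuse(channels):
    """Normalize each channel separately, then stack them into one vector per time step."""
    normalized = [zscore(series) for series in channels.values()]
    return [list(step) for step in zip(*normalized)]


# Made-up readings sampled at the same three moments.
channels = {
    "temperature_c": [33.0, 33.5, 34.0],
    "humidity_pct": [40, 50, 60],
    "heart_rate_bpm": [70, 80, 90],
}

fused = fuse(channels)
for vector in fused:
    print(vector)
```

Normalizing per channel keeps a signal with large raw numbers, such as heart rate, from swamping one with small numbers, such as temperature, when a model reads the fused vector.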

The field itself is a branch of affective computing, the study of systems that sense and interpret emotion with sensors and algorithms.

Systems that do not require a camera can also lessen bias from lighting and skin tone, and they work in low light.

Interpreting the body is not foolproof. The same jump in heart rate can reflect surprise, fear, or a sprint to catch a bus, so context still matters.

Emotion sensor in the real world

Remote care is a clear use case. Another recent review of remote patient monitoring trials found improvements in safety, adherence, and mobility, with cost trends moving lower in several programs.

This study is early-stage and used a small, specific sample. Performance in a clinic, at home, and during day-long wear still needs to be measured.

Design choices about privacy and fairness will matter if devices like this scale. Independent guidance urges clear consent, encryption, and audits for bias when wearable data flows into AI systems.

The team points to other use cases that do not rely on speech. Potential examples include support for nonverbal patients, better tracking of dementia-related behaviors, overdose detection, and safety monitoring in athletics.

A device that reads multiple body signals gives clinicians an extra channel to compare with words and facial cues. That does not replace clinical judgment; it adds objective data streams that can be tracked over time.

Therapists and primary care teams could use alerts to check in before stress escalates. Families might get better information during telehealth visits when a loved one struggles to describe what they feel.

What happens next

Larger trials need more diverse participants, including wider age ranges and health conditions, to test generalizability.

Studies should measure wear comfort, battery life, and signal quality during normal daily movement across hours rather than minutes.

Engineers will keep shrinking the footprint and optimizing energy harvesting so the patch stays comfortable through long wear. Clinicians and ethicists will need protocols for consent, data sharing, and when to act on an emotion alert.

The path to clinical use also runs through regulatory review. Evidence must show safety, accuracy, and clear benefit over current practice before routine deployment.

The study is published in Nano Letters.
