Implant allows a paralyzed man to control an object using another person's hand

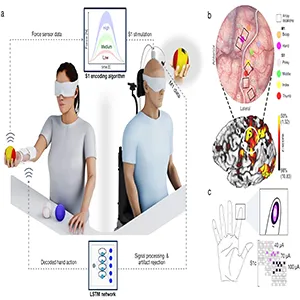

A new study documents a man with paralysis who moves and feels objects through another person’s hand by way of a brain implant. The work took place in New York with a fully implanted system that translated his intention into someone else’s grasp.

In shared tasks, the team reports a 94 percent success rate when pouring water, a basic test of hand control. That single number signals stable performance during a simple but meaningful activity.

The work was led by Chad Bouton at the Feinstein Institutes for Medical Research in New York. His team linked one person’s brain signals to another person’s hand and returned touch to the sender.

The participant imagined closing his hand while seated across from a partner. A brain-computer interface decoded that intent in real time.

The partner wore flexible electrodes on the forearm while keeping her own muscles passive. Those electrodes powered the closing and opening of her hand on cue.

Bouton and colleagues have pursued clinical approaches that reconnect brain, body, and spinal cord after injury. Their focus is on restoring useful function that holds up beyond short demos.

The team frames the work as a cooperative model. One person’s intention helps another complete daily actions, while both practice goal-directed movement together.

How the human avatar works

Implanted arrays recorded activity from motor and sensory areas that map to the hand. The system translated those spikes into a simple open or close command for the partner’s forearm muscles.
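The open-or-close translation described above can be pictured with a minimal sketch. It is not the study's decoder, which the article does not detail. The class name, the weights, and both thresholds are hypothetical, and the two-threshold (hysteresis) rule is an assumption chosen here only to keep the command from flickering.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class GraspDecoder:
    """Toy two-state decoder: per-channel firing rates in, 'open' or 'close' out."""

    weights: Sequence[float]   # hypothetical per-channel weights
    close_above: float = 1.0   # hypothetical score needed to switch to 'close'
    open_below: float = 0.5    # hypothetical score needed to switch back to 'open'
    state: str = "open"

    def update(self, firing_rates: Sequence[float]) -> str:
        if len(firing_rates) != len(self.weights):
            raise ValueError("one firing rate per channel is required")
        # Weighted sum of firing rates stands in for a trained decoder.
        score = sum(w * r for w, r in zip(self.weights, firing_rates))
        # Hysteresis: the state changes only when the score crosses the
        # threshold that belongs to the opposite state.
        if self.state == "open" and score > self.close_above:
            self.state = "close"
        elif self.state == "close" and score < self.open_below:
            self.state = "open"
        return self.state


# Made-up firing rates (spikes per second) on two channels over four time steps.
decoder = GraspDecoder(weights=[0.02, 0.03])
samples = [[10, 10], [30, 20], [20, 10], [5, 5]]
commands = [decoder.update(rates) for rates in samples]
```

In this toy run the third sample scores between the two thresholds, so the hand stays closed until the signal clearly drops.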

To return touch, the setup used intracortical microstimulation: tiny pulses delivered to the brain’s touch area that tracked the forces at the partner’s fingertips. A stronger squeeze meant stronger pulses.

Hand forces came from small sensors on the partner’s thumb and index finger. The computer adjusted stimulation so the sender felt light, medium, or strong pressure, standing in for his own missing sense of touch.
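As a rough illustration of that force-to-sensation mapping, the sketch below bins two fingertip readings into the three pressure levels the article names. Everything numeric is an assumption: the force cutoffs in newtons, the choice to use the larger of the two readings, and the relative stimulation scale (a unitless 0 to 1 value, not a real pulse amplitude) are all hypothetical.

```python
from typing import Tuple

# Hypothetical relative stimulation strength per level (unitless, 0 to 1).
STIM_SCALE = {"none": 0.0, "light": 0.3, "medium": 0.6, "strong": 1.0}


def stimulation_for_grip(thumb_n: float,
                         index_n: float,
                         contact_min: float = 0.1,
                         light_max: float = 1.0,
                         medium_max: float = 3.0) -> Tuple[str, float]:
    """Map thumb and index forces (newtons) to a pressure level and stimulation scale."""
    if thumb_n < 0 or index_n < 0:
        raise ValueError("forces must be non-negative")
    # Assumption: the firmer of the two fingertips sets the felt pressure.
    force = max(thumb_n, index_n)
    if force < contact_min:
        level = "none"
    elif force <= light_max:
        level = "light"
    elif force <= medium_max:
        level = "medium"
    else:
        level = "strong"
    return level, STIM_SCALE[level]
```

For example, `stimulation_for_grip(2.0, 1.0)` falls in the middle bin under these made-up cutoffs, so a firmer squeeze by the partner would step the sender's stimulation up one level.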

Paralysis implant study results

During blindfolded trials, the sender identified the firmness of three objects with 64 percent accuracy. That result came from touches relayed through the partner’s hand to his own brain.
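To put the 64 percent figure in context, a quick calculation compares it with pure guessing among three objects. This assumes each object was equally likely on every trial, which the article does not state. The article also gives no trial count, so no significance test is attempted here.

```python
def chance_accuracy(n_choices: int) -> float:
    """Accuracy expected from guessing when all choices are equally likely."""
    if n_choices < 1:
        raise ValueError("need at least one choice")
    return 1.0 / n_choices


reported = 0.64               # accuracy reported in the article
chance = chance_accuracy(3)   # three objects, so about 0.333
ratio = reported / chance     # how many times better than guessing

print(f"chance: {chance:.1%}, reported: {reported:.0%}, ratio: {ratio:.2f}x")
```

Under that assumption, the reported accuracy is a little under twice the guessing rate.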

In functional tests with another participant who had paralysis, shared control raised the success rate of a bottle pour to 94 percent. The same protocol without help succeeded far less often.

Those numbers are early, but they point to repeatable performance. They also show that returning sensation helped the sender tell soft from hard, even without seeing the object.

Restoring sensation is not a bonus feature; it is a control signal. Prior research showed that delivering touch to the brain improved the speed and accuracy of a person controlling a robotic arm.

The present work applies that logic to two humans working as a pair. Touch returns to the sender, while motion happens in the partner’s hand.

Earlier clinical work showed that a person could use implanted signals to command their own paralyzed arm through electrical muscle activation. That study established a path for intention-driven muscle control.

This avatar builds on that foundation with a twist. The movement happens in another person, and the tactile loop closes back to the original brain.

Next steps for paralysis implants

The approach is invasive, and that matters. Implant decisions weigh likely benefit, duration of use, and surgical risk.

The lead team has shown that gains can last beyond the lab. A 2024 update documented daily improvements for the same participant after the earlier phase of the trial.

Future studies will need to scale the number and placement of sensors on the partner’s hand. More precise sensory maps in the sender’s brain could improve object recognition.

Ethical questions are not side issues. Any system that lets one person control another’s movements needs clear guardrails, explicit consent, and transparent logs of who issued which command.
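One way to picture a transparent log of who issued which command is an append-only record in which each entry carries a hash of the one before it, so later edits become detectable. This is a sketch of a general technique, not something the study describes. The class, the field names, and the `consent_ref` identifier are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class CommandLog:
    """Append-only, hash-chained record of commands sent to a partner."""

    entries: List[dict] = field(default_factory=list)

    def record(self, sender_id: str, partner_id: str, command: str,
               timestamp: float, consent_ref: str) -> dict:
        # Each entry stores the previous entry's hash to form a chain.
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {
            "sender_id": sender_id,
            "partner_id": partner_id,
            "command": command,
            "timestamp": timestamp,
            "consent_ref": consent_ref,   # hypothetical pointer to a consent record
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

A caller would record each decoded command as it is sent, for instance `log.record("sender-1", "partner-1", "close", 0.0, "consent-A")`, and run `log.verify()` during review. A real deployment would also need secure storage and access control, which this sketch leaves out.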

Remote collaboration is a tempting angle. The same setup could, in theory, connect people across distance, with the sender guiding and feeling through a willing partner.

A practical checkpoint will be training time and reliability outside controlled settings. Battery life, secure wireless links, and robust decoding in the presence of noise all matter for real-world use.

Why it all matters

This is not mind reading. The system only translates deliberate motor intent that the sender practices and the computer recognizes.

It is also not magic. It is the disciplined pairing of recorded brain activity, patterned muscle stimulation, and carefully tuned sensory pulses.

If the field keeps returning touch along with movement, cooperation may become a standard part of rehab. Two people could build skills faster together than either could alone.

If future versions reduce surgical burden, more people with paralysis could become candidates. The team has suggested noninvasive routes for some partners, which would broaden access if performance holds.

The study is posted as a preprint on medRxiv.
