New VR glasses are credit-card thin and create lifelike holographic visuals

Virtual reality keeps improving, yet most headsets still clamp a heavy box to your face and flatten scenes into stereo billboards. A new prototype set of holographic glasses from Stanford engineers replaces that bulk with a slim optical sandwich.

This fascinating invention is only 0.12 inches (3 millimeters) thick, and the visuals the glasses create look “deep” instead of layered.

At Stanford University, electrical engineer Gordon Wetzstein leads the project, working with postdoctoral scholar Suyeon Choi and collaborators.

Their goal is to pass what colleagues call a Visual Turing Test. This is a standard borrowed from computing, in which a viewer cannot tell a natural object from a digitally created one.

Holographic glasses make better VR

Invented by Nobel laureate Dennis Gabor, holography records both the amplitude (brightness) and the phase (timing) of light waves, letting a flat surface rebuild a full three-dimensional wavefront.
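To see why phase matters, here is a minimal Python sketch; the waves and numbers are illustrative and not from the study. Two light waves with equal brightness but different phase look identical to an ordinary detector, which records only intensity. Interfering them with an assumed reference wave, as a hologram does, turns the phase difference into a visible brightness difference.

```python
import numpy as np

def recorded_intensity(obj_wave, ref_wave=None):
    """Return what a light detector records: intensity |E|^2 only.
    If a reference wave is given, record the interference pattern instead."""
    total = obj_wave if ref_wave is None else obj_wave + ref_wave
    return np.abs(total) ** 2

# Two hypothetical object waves with the same brightness (amplitude 1)
# but different phase (timing): 0 rad versus pi/2 rad.
obj_a = np.exp(1j * 0.0)
obj_b = np.exp(1j * np.pi / 2)

# Intensity alone cannot tell them apart: both record as about 1.0.
print(recorded_intensity(obj_a), recorded_intensity(obj_b))

# Adding an assumed reference wave (amplitude 1, phase 0) turns the phase
# difference into a brightness difference: about 4.0 versus 2.0.
ref = 1.0 + 0j
print(recorded_intensity(obj_a, ref), recorded_intensity(obj_b, ref))
```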

Because every pixel bends light rather than just shining it, a holographic screen can place virtual objects at any distance without the eye strain that plagues stereoscopic displays.
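How a flat, phase-shaping screen can put a point of light at a chosen distance can be sketched with the textbook paraxial lens phase, φ(x, y) = −π(x² + y²)/(λz). This is a generic illustration under assumed parameters (pixel pitch, wavelength, and resolution are made up), not the Stanford team's hologram algorithm; a full scene would combine many such contributions, one per point, each with its own depth z.

```python
import numpy as np

def focusing_phase(n_pixels, pitch_m, wavelength_m, depth_m):
    """Paraxial thin-lens phase pattern (radians) that focuses a plane wave to a
    point depth_m away from a square modulator of n_pixels x n_pixels."""
    coords = (np.arange(n_pixels) - n_pixels // 2) * pitch_m
    x, y = np.meshgrid(coords, coords)
    phase = -np.pi * (x**2 + y**2) / (wavelength_m * depth_m)
    # A phase modulator can only apply phase modulo 2*pi, so wrap the values.
    return np.angle(np.exp(1j * phase))

# Assumed, illustrative parameters: 8-micrometer pixels, green light.
near = focusing_phase(512, 8e-6, 520e-9, depth_m=0.5)   # point 0.5 m away
far = focusing_phase(512, 8e-6, 520e-9, depth_m=5.0)    # point 5 m away
```

The nearer point needs more tightly packed rings of phase, which is how the same flat panel can encode different focal distances.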

“This technology offers capabilities that we can’t get with any other type of display, in a package that is much smaller than anything on the market today,” said Wetzstein.

The team chose holography not for novelty but to match human depth cues that require subtle phase information.

Current commercial headsets imitate depth by sending separate images to each eye, but both eyes still focus on the same flat panel. Holography restores natural focusing.

A kitten can sit on your lap while a planet floats miles away, and each will appear crisp at its own distance.

How these holographic glasses work

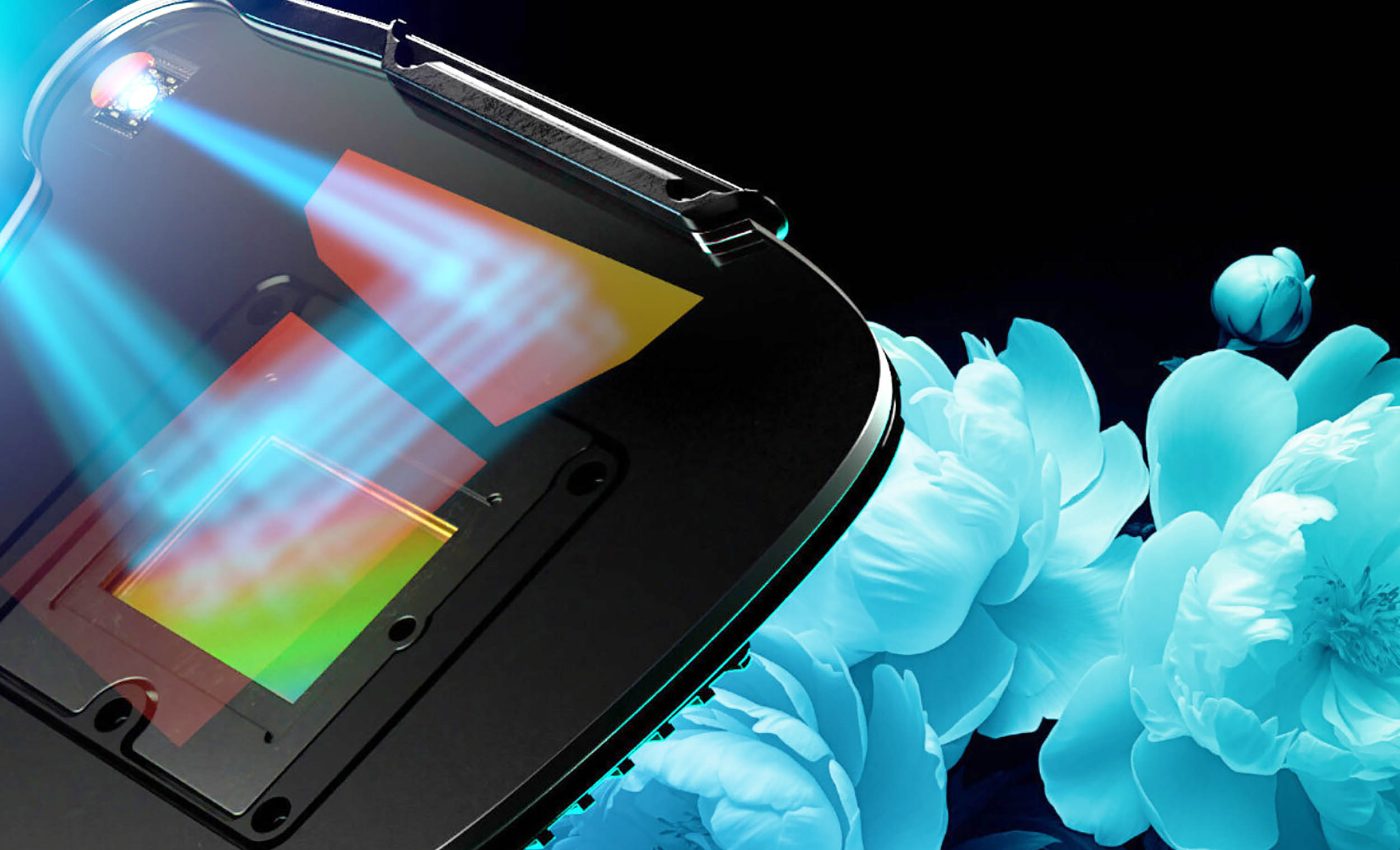

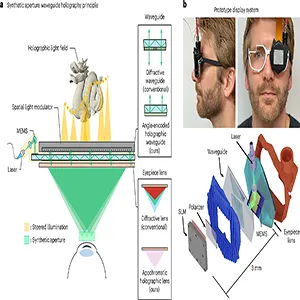

Miniature lasers feed a microelectromechanical (MEMS) mirror that steers light into a custom waveguide only 0.02 inches (0.5 millimeters) thick.

Inside that glassy slab, volume Bragg gratings bounce and expand the beam before it exits toward a reflective spatial light modulator that sculpts the final hologram.

The entire optical stack, from input face to eyepiece, measures just 0.12 inches (3 millimeters).

That leanness means the device could fit into frames no bigger than ordinary eyeglasses and rest on the bridge of the nose for hours without causing neck fatigue.

Choi calls the experience mixed reality rather than virtual because real scenery seeps through untreated areas of the lenses.

A digitally summoned sculpture can sit on your real coffee table while sunlight streams around its edges.

How light moves in holographic glasses

Conventional surface-relief gratings leak light in both directions, washing out images with glare.

The Stanford design swaps those gratings for angle-encoded volume Bragg gratings that diffract light in only one direction and only within a narrow color band, cutting the stray light that reaches the eye.
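The wavelength and angle selectivity comes from the Bragg condition, 2nΛ sin θ = λ, which links the grating period Λ, the refractive index n, and the angle θ between the light and the grating planes. The sketch below applies that textbook relation with assumed values (n = 1.5, θ = 45°, typical laser wavelengths); it does not model the team's angle-encoded design.

```python
import math

def bragg_period(wavelength_m, n, theta_deg):
    """Grating period satisfying the first-order Bragg condition
    2 * n * period * sin(theta) = wavelength (theta measured from the grating planes)."""
    return wavelength_m / (2 * n * math.sin(math.radians(theta_deg)))

def bragg_wavelength(period_m, n, theta_deg):
    """Vacuum wavelength that a grating of this period diffracts most strongly at theta."""
    return 2 * n * period_m * math.sin(math.radians(theta_deg))

# Assumed, illustrative values: refractive index 1.5, 45-degree geometry,
# and common red, green, and blue laser lines.
N_GLASS, THETA = 1.5, 45.0
for color, lam in [("red", 638e-9), ("green", 520e-9), ("blue", 450e-9)]:
    period = bragg_period(lam, N_GLASS, THETA)
    print(f"{color}: period of about {period * 1e9:.0f} nm")

# A grating tuned for green at this angle peaks only at the green wavelength.
green_period = bragg_period(520e-9, N_GLASS, THETA)
print(f"green grating peaks at {bragg_wavelength(green_period, N_GLASS, THETA) * 1e9:.0f} nm")
```

Because period, angle, and wavelength are locked together, a grating tuned for green at a given angle is off-condition for red and blue, which is consistent with giving each color its own set of gratings.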

Three sets of gratings funnel red, green, and blue laser light through identical paths, minimizing chromatic blur.

After two reflections, the beam lands on the modulator, and the modulated wave then passes through a holographic eyepiece that places the picture at optical infinity.

The waveguide approach also eliminates large, curved lenses, so there is no need for the physical depth normally required to bring a screen into focus. Flattening the optics is what allows the package to be as thin as a credit card.

Wide natural view

Optical designers crave a high étendue, roughly the product of the eyebox area and the solid angle of the field of view. A large value means the user can move their pupils freely while still seeing a wide scene, a combination that is very hard to achieve in thin devices.

The prototype delivers a 38-degree diagonal field of view and a 0.35 by 0.31 inch (8.8 by 7.9 millimeter) eyebox. Together, these figures give an étendue about two orders of magnitude higher than that of earlier holographic glasses.
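A back-of-the-envelope étendue estimate from the article's numbers: multiply the eyebox area by the solid angle of the field of view. Treating the 38-degree diagonal as a circular cone is a simplifying assumption that slightly overstates the solid angle of a rectangular field, so the result is a rough upper estimate rather than the paper's own figure.

```python
import math

def cone_solid_angle(full_angle_deg):
    """Solid angle (steradians) of a circular cone with the given full opening angle."""
    half = math.radians(full_angle_deg / 2)
    return 2 * math.pi * (1 - math.cos(half))

eyebox_area_mm2 = 8.8 * 7.9          # eyebox dimensions from the article
fov_sr = cone_solid_angle(38.0)      # assumption: 38-degree diagonal treated as a circular cone
etendue = eyebox_area_mm2 * fov_sr   # units: mm^2 * sr

print(f"approximate etendue: {etendue:.1f} mm^2 sr")   # roughly 23.8
```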

Users can glance around the virtual scene without losing sharpness, a property that makes the imagery feel fixed in space rather than painted on the retina.

Étendue grows here because a scanning laser creates a synthetic aperture: software stitches together a ten-by-ten grid of overlapping sub-pupils. Each patch carries partial information, yet together they recreate a seamless light field.
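The geometry of a ten-by-ten synthetic aperture can be sketched as a grid of overlapping sub-pupils tiling the 8.8 by 7.9 millimeter eyebox. The square shape and 2-millimeter width of each sub-pupil are hypothetical, and the code checks only coverage; it does not model how the scanning laser creates each sub-pupil or how software stitches their wavefronts.

```python
import numpy as np

EYEBOX_W, EYEBOX_H = 8.8, 7.9   # mm, from the article
SUBPUPIL = 2.0                  # mm, hypothetical sub-pupil width
N = 10                          # ten-by-ten grid, from the article

def subpupil_centers(w, h, n, size):
    """Centers of an n-by-n grid of square sub-pupils whose outer edges
    touch the borders of a w-by-h eyebox."""
    xs = np.linspace(size / 2, w - size / 2, n)
    ys = np.linspace(size / 2, h - size / 2, n)
    cx, cy = np.meshgrid(xs, ys)
    return np.column_stack([cx.ravel(), cy.ravel()])

def coverage_map(centers, size, w, h, samples=200):
    """Count how many sub-pupils cover each sample point of the eyebox."""
    gx, gy = np.meshgrid(np.linspace(0, w, samples), np.linspace(0, h, samples))
    count = np.zeros(gx.shape, dtype=int)
    half = size / 2 + 1e-9   # small tolerance for floating-point edges
    for cx, cy in centers:
        count += (np.abs(gx - cx) <= half) & (np.abs(gy - cy) <= half)
    return count

centers = subpupil_centers(EYEBOX_W, EYEBOX_H, N, SUBPUPIL)
coverage = coverage_map(centers, SUBPUPIL, EYEBOX_W, EYEBOX_H)
step = (EYEBOX_W - SUBPUPIL) / (N - 1)
print(f"{len(centers)} sub-pupils, horizontal overlap {1 - step / SUBPUPIL:.0%}")
print("every eyebox point covered:", coverage.min() >= 1)
```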

AI sharpens holographic glasses visuals

To polish those patches, the team trained an implicit neural network that learns how partially coherent light meanders inside the glass.

The algorithm maps four-dimensional spatio-angular coordinates to the complex wavefront that finally leaves the waveguide, which lets the system trim optical errors in real time.
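An implicit neural network of this kind is, at its core, a function from coordinates to field values. The minimal, untrained NumPy sketch below takes (x, y, u, v) points and returns one complex number each. The Fourier-feature encoding, layer sizes, and ReLU activations are assumptions, and the sketch omits training and the partial-coherence modeling the article describes.

```python
import numpy as np

def fourier_features(coords, n_freqs=6):
    """Encode (x, y, u, v) coordinates with sines and cosines at octave-spaced
    frequencies, a common input encoding for implicit neural networks."""
    freqs = np.pi * 2.0 ** np.arange(n_freqs)             # (n_freqs,)
    scaled = coords[..., None] * freqs                     # (..., 4, n_freqs)
    enc = np.concatenate([np.sin(scaled), np.cos(scaled)], axis=-1)
    return enc.reshape(*coords.shape[:-1], -1)             # (..., 8 * n_freqs)

class TinyWavefrontMLP:
    """Hypothetical, untrained network mapping 4D spatio-angular coordinates
    (x, y, u, v) to one complex field value per coordinate."""

    def __init__(self, n_freqs=6, hidden=64, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = 4 * 2 * n_freqs
        self.n_freqs = n_freqs
        self.layers = [
            (rng.normal(0, np.sqrt(2 / in_dim), (in_dim, hidden)), np.zeros(hidden)),
            (rng.normal(0, np.sqrt(2 / hidden), (hidden, hidden)), np.zeros(hidden)),
            (rng.normal(0, np.sqrt(1 / hidden), (hidden, 2)), np.zeros(2)),
        ]

    def __call__(self, coords):
        h = fourier_features(coords, self.n_freqs)
        for w, b in self.layers[:-1]:
            h = np.maximum(0.0, h @ w + b)        # ReLU hidden layers
        w, b = self.layers[-1]
        out = h @ w + b                            # two outputs per coordinate
        return out[..., 0] + 1j * out[..., 1]      # read them as real and imaginary parts

model = TinyWavefrontMLP()
coords = np.random.default_rng(1).uniform(-1, 1, size=(1000, 4))  # sample (x, y, u, v) points
field = model(coords)
print(field.shape, field.dtype)
```

In a real system the weights would be fitted so that the predicted field matches measurements of the physical waveguide; here they are random, so only the shapes and data flow are meaningful.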

Earlier models relied on convolutional networks and assumed perfect coherence, an unrealistic simplification.

The new network needs roughly one-tenth of the training data yet predicts wave propagation more accurately across the entire synthetic aperture.

Artificial intelligence also handles phase retrieval, the math that converts a target scene into a pattern of voltages for the modulator.
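The article does not detail the team's AI-based phase retrieval, so as a point of reference here is the classic Gerchberg-Saxton algorithm, a swapped-in, non-neural technique. It searches for a phase-only pattern whose far field (modeled as a unitary FFT, which is an assumption) matches a target brightness. Converting that phase into modulator voltages would need a device-specific calibration that is not shown.

```python
import numpy as np

def gerchberg_saxton(target_amp, iters=50, seed=0):
    """Classic Gerchberg-Saxton phase retrieval for a phase-only modulator.
    Propagation to the image plane is modeled as a unitary 2D FFT (far field)."""
    rng = np.random.default_rng(seed)
    # Scale the target so its energy matches a unit-amplitude modulator field.
    target = target_amp * np.sqrt(target_amp.size / np.sum(target_amp ** 2))
    phase = rng.uniform(-np.pi, np.pi, size=target_amp.shape)
    errors = []
    for _ in range(iters):
        image = np.fft.fft2(np.exp(1j * phase), norm="ortho")   # modulator -> image plane
        errors.append(np.linalg.norm(np.abs(image) - target))   # amplitude mismatch
        image = target * np.exp(1j * np.angle(image))           # enforce target amplitude
        slm = np.fft.ifft2(image, norm="ortho")                 # image plane -> modulator
        phase = np.angle(slm)                                   # keep phase only
    return phase, errors

# Toy target: a bright square on a dark 64 x 64 background.
target = np.zeros((64, 64))
target[24:40, 24:40] = 1.0
phase, errors = gerchberg_saxton(target, iters=50)
print(f"amplitude error: {errors[0]:.2f} -> {errors[-1]:.2f}")
```

Each pass alternates between enforcing the target brightness in the image plane and the phase-only constraint at the modulator; with a unitary transform, the amplitude error never increases from one pass to the next.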

By supervising the optimization on light-field errors rather than ray-tracing errors, the system maintains image fidelity even when eye position or pupil size changes.

Testing in the lab

Captured photos show a Snellen-equivalent resolution of 1.2 arcminutes, close to 20/20 vision, which corresponds to 1 arcminute. Text remains legible as the camera focuses from infinity to 16 inches (40 centimeters), demonstrating that vergence and accommodation cues line up.
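Two standard conversions put these numbers in context: the Snellen denominator is 20 times the smallest resolvable angle in arcminutes, and focus demand in diopters is one over the distance in meters. By that arithmetic, 1.2 arcminutes is about 20/24, and the tested focus range spans 0 to 2.5 diopters. The function names are illustrative.

```python
def snellen_denominator(mar_arcmin):
    """20/20 vision resolves 1 arcminute, so acuity is 20/(20 * MAR)."""
    return 20 * mar_arcmin

def diopters(distance_m):
    """Accommodation demand in diopters; 1/inf is 0.0, i.e. optical infinity."""
    return 1.0 / distance_m

print(f"Snellen equivalent: 20/{snellen_denominator(1.2):.0f}")
print(f"focus range: {diopters(float('inf'))} D to {diopters(0.40)} D")
```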

“This is the best 3D display created so far and a great step forward,” Wetzstein remarked in the lab. Still, he tempers that claim with caution.

Volume three of what he calls a research “trilogy” will aim for a commercial product, a milestone he predicts is several years away.

Bench tests also show little vignetting as a mock eye slides laterally across the entire 0.35-inch (8.8-millimeter) eyebox.

The eyebox is almost as forgiving of vertical motion, which should reassure users whose headsets inevitably slip during normal movement.

Holographic glasses, VR, and the future

Education, remote collaboration, and telemedicine could be early winners, because lightweight glasses lower the barrier to all-day wear.

Architects might sketch holographic beams into a construction site, and surgeons could overlay imaging data directly on a patient without glancing at monitors.

Challenges remain. Higher frame rates demand faster modulators, and the laser-driven backlight must pass regulatory safety checks.

Mass manufacturing of volume Bragg gratings with nanometer precision is still expensive.

Even so, shrinking a mixed reality display to the scale of everyday eyewear brings digital content closer to parity with physical objects. Passing a Visual Turing Test, in which viewers cannot tell real objects from digital ones, no longer feels far-fetched.

The study is published in Nature Photonics.
