New robots can use multisensory perception to map rugged terrain

Robots that rely only on cameras or LiDAR (light detection and ranging) can stumble when foliage hides the trail, mud muffles their steps, or dense underbrush blocks their line of sight.

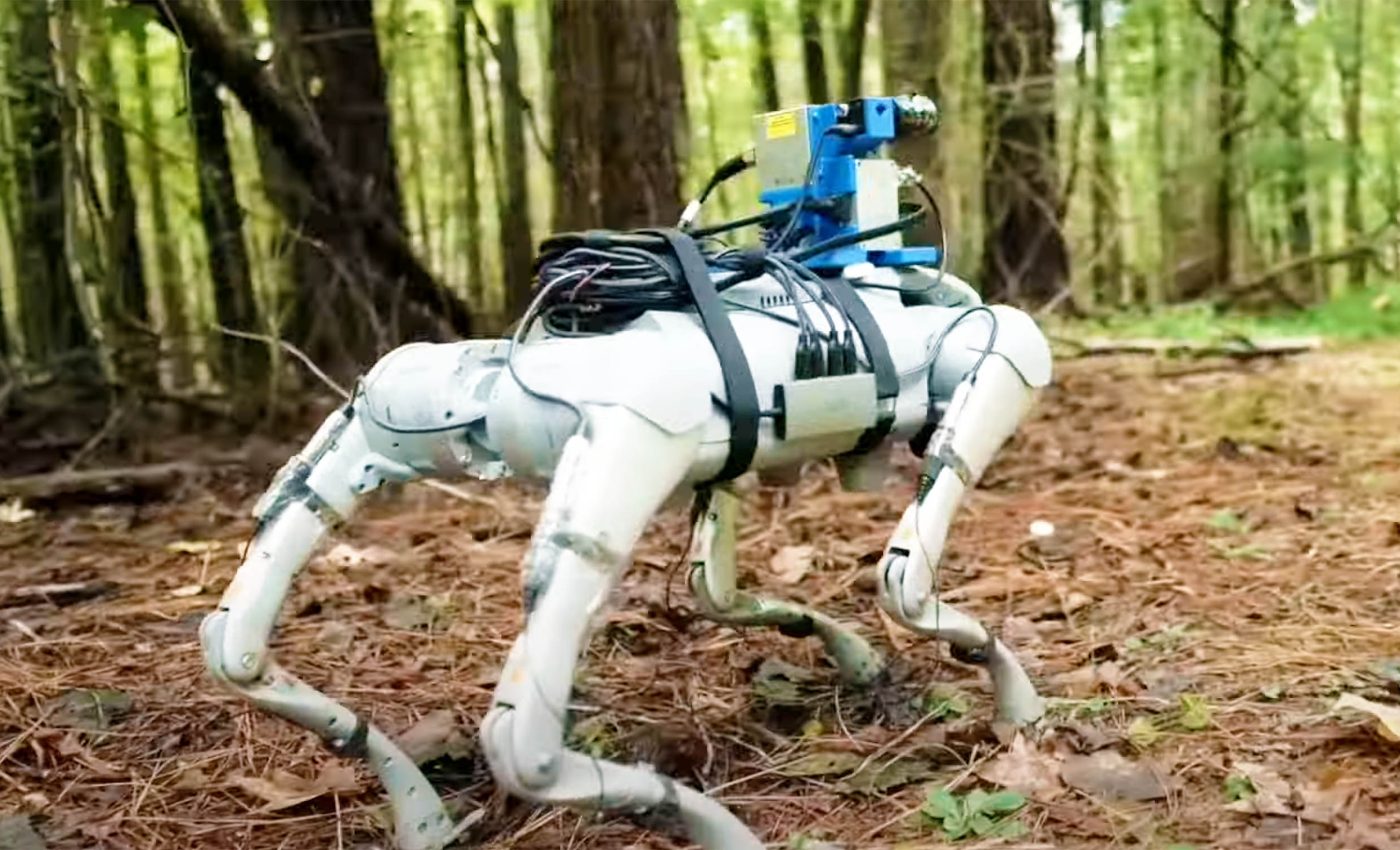

To overcome this problem, a research team from Duke University has created a framework called WildFusion. It equips a four-legged robot with the additional senses of vibration and touch, enabling it to build richer maps and choose safer footholds in real forests.

WildFusion expands robot senses

Conventional outdoor robots treat the world as a set of points captured by spinning LiDAR or a stereo camera. This sparse view breaks down whenever vegetation hides a path or when reflective surfaces confuse laser returns.

“Typical robots rely heavily on vision or LiDAR alone, which often falter without clear paths or predictable landmarks,” said Yanbaihui Liu, the lead student author and a second-year Ph.D. student at Duke.

“Even advanced 3D mapping methods struggle to reconstruct a continuous map when sensor data is sparse, noisy, or incomplete, which is a frequent problem in unstructured outdoor environments. That’s exactly the challenge WildFusion was designed to solve.”

WildFusion combines the usual RGB camera and LiDAR with contact microphones near each footpad, force-sensitive tactile skins on the limbs, and an inertial measurement unit.

As the machine trots, microphones record the unique acoustic signatures of every step – the crunch of dry twigs, the thud of exposed roots, the squish of saturated moss.

Tactile sensors log the pressure profile so the controller knows immediately if a foothold is solid or slippery. The inertial unit reports pitch, roll, and yaw, giving the controller a sense of whether the chassis is tilting dangerously.
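As a rough illustration of that tilt check, the sketch below turns pitch and roll readings into a single tilt angle and compares it with a limit. The function names and the 25-degree threshold are assumptions made for this example; the article does not describe how WildFusion's controller actually makes the decision.

```python
import math

# Hypothetical safety limit; the article does not state a threshold.
MAX_TILT_DEG = 25.0


def tilt_angle_deg(pitch_deg: float, roll_deg: float) -> float:
    """Angle between the robot's vertical axis and gravity, in degrees."""
    pitch = math.radians(pitch_deg)
    roll = math.radians(roll_deg)
    # With roll-pitch-yaw angles, the cosine of the tilt is
    # cos(pitch) * cos(roll). Yaw only turns the robot about the
    # vertical axis, so it does not change the tilt.
    cos_tilt = math.cos(pitch) * math.cos(roll)
    cos_tilt = max(-1.0, min(1.0, cos_tilt))  # guard against rounding error
    return math.degrees(math.acos(cos_tilt))


def is_tilting_dangerously(pitch_deg: float, roll_deg: float,
                           limit_deg: float = MAX_TILT_DEG) -> bool:
    """True when the combined pitch and roll exceed the assumed limit."""
    return tilt_angle_deg(pitch_deg, roll_deg) > limit_deg
```

A real controller would likely also consider how fast the tilt is changing, but a single threshold is enough to show how raw IMU angles become a yes-or-no safety signal.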

A map in the robot’s head

Each sensory stream passes through a dedicated neural encoder that condenses raw values into a compact vector.

Those vectors are fused inside a deep learning architecture that represents the environment not as disconnected dots, but as a smooth mathematical field – a technique known as an implicit neural representation.

Because the model fills gaps between sparse observations, the robot can infer obstacles hidden behind branches or predict sinkholes obscured by leaves.
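The pipeline described above can be sketched in a few lines of NumPy. This is a toy version under stated assumptions, not the Duke team's architecture: the input sizes, the fusion by simple concatenation, the two-layer network, and the random untrained weights are all hypothetical. It only shows the shape of the idea, in which each sense gets its own encoder, the compact vectors are fused, and a continuous function can then be queried at any point in space rather than only where a sensor happened to sample.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT = 8  # hypothetical size of each modality's compact vector


def make_encoder(in_dim, out_dim=LATENT):
    """Stand-in for a learned encoder: one random linear layer plus tanh."""
    weights = rng.normal(scale=0.1, size=(in_dim, out_dim))
    return lambda x: np.tanh(x @ weights)


# One dedicated encoder per sense (input sizes are made up).
encoders = {
    "vision": make_encoder(32),
    "audio": make_encoder(16),
    "touch": make_encoder(4),
    "imu": make_encoder(3),
}


def fuse(readings):
    """Fuse the per-sense vectors by concatenation (an assumed scheme)."""
    return np.concatenate([encoders[name](readings[name]) for name in encoders])


FUSED = LATENT * len(encoders)
W1 = rng.normal(scale=0.5, size=(3 + FUSED, 32))
W2 = rng.normal(scale=0.5, size=(32, 1))


def field(points, fused):
    """Implicit field: maps any (x, y, z) query to a score in (0, 1)."""
    points = np.atleast_2d(points)
    cond = np.broadcast_to(fused, (points.shape[0], fused.shape[0]))
    hidden = np.tanh(np.concatenate([points, cond], axis=1) @ W1)
    logits = (hidden @ W2)[:, 0]
    return 1.0 / (1.0 + np.exp(-logits))


# Fake sensor readings, then queries at arbitrary coordinates.
readings = {
    "vision": rng.normal(size=32),
    "audio": rng.normal(size=16),
    "touch": rng.normal(size=4),
    "imu": rng.normal(size=3),
}
fused = fuse(readings)
queries = np.array([[0.0, 0.0, 0.0], [0.13, 0.42, 0.05]])
scores = field(queries, fused)
```

Because `field` accepts any coordinate, the map has no holes between sensor samples. In a trained system, the values it returns in those gaps would reflect what the network learned from all of the senses together.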

“WildFusion opens a new chapter in robotic navigation and 3D mapping,” said Boyuan Chen, a professor of mechanical engineering and materials science, electrical and computer engineering, and computer science at Duke.

“It helps robots to operate more confidently in unstructured, unpredictable environments like forests, disaster zones, and off-road terrain.”

Testing WildFusion in real terrain

To test the system outside the lab, the team marched its quadruped through Eno River State Park in North Carolina. The course included dense laurels, sandy creek beds, gravel service roads, and tangles of woody debris.

Vision alone proved unreliable when dappled sunlight fooled depth estimates, yet WildFusion’s fused map still predicted stable footholds.

“Watching the robot confidently navigate terrain was incredibly rewarding,” Liu said. “These real-world tests proved WildFusion’s remarkable ability to accurately predict traversability, significantly improving the robot’s decision-making on safe paths through challenging terrain.”

Chen likens the process to human intuition. “Think of it like solving a puzzle where some pieces are missing, yet you’re able to intuitively imagine the complete picture,” he explained.

“WildFusion’s multimodal approach lets the robot ‘fill in the blanks’ when sensor data is sparse or noisy, much like what humans do.”

Robots map the woods

Although demonstrated in a state park, the architecture is modular. The researchers plan to bolt on thermal imagers, humidity probes, or chemical sensors so future versions can detect heat from survivors in collapsed buildings or gauge soil moisture for agricultural scouting.

With minimal wiring changes, the same fusion core could also guide tracked vehicles across rubble or aerial drones beneath canopies where GPS signals fade.

“One of the key challenges for robotics today is developing systems that not only perform well in the lab but that reliably function in real-world settings,” Chen said. “That means robots that can adapt, make decisions, and keep moving even when the world gets messy.”

When humans can’t go

Robots outfitted with WildFusion could reduce risk in hazardous jobs – from scouting wildfire perimeters to inspecting aging power lines that snake through thick forests.

If a landslide blocks a road after an earthquake, a multisensory robot could hike in, map the debris, and relay data before human crews arrive.

Offshore wind farms and mountain pipelines, often shrouded in fog or framed by moving foliage, pose similar navigation challenges that a vibration-savvy machine could handle.

Robots that feel

The project underscores a broader trend in robotics: giving machines bodies that truly feel the world. Engineers have long taught algorithms to see, but as WildFusion shows, layering subsurface vibrations and tactile feedback can elevate robotic intuition.


With its ability to fuse sound, touch, vision, and motion into one seamless environmental model, WildFusion pushes robots a step closer to the nuanced perception humans deploy on a casual weekend hike.

The technology opens the door to operations in the many places where clear paths and predictable landmarks are the exception, not the norm.


The study will be presented at the IEEE International Conference on Robotics and Automation (ICRA 2025) in Atlanta.
